Transparency vs Interpretability

While we may not know how a model generates its result, we should still know what was asked of the model to get the result.

AI models, or, more generally, “algorithms,” are taking on more of the burden of decision-making in our society. This isn’t altogether bad. As society grows more complex, decisions require reviewing far more information, at a faster pace, before “deciding” on the next action. Computers are much better suited to reviewing large amounts of information at sub-millisecond speeds.

However, there are downsides to be aware of as we go further down this path. AI decision-making is new and nearly impossible to interpret: we don’t know how the model arrived at the decision it did. Ultimately, this leaves us in the dark about how we can affect a situation to our benefit.

AI Agents

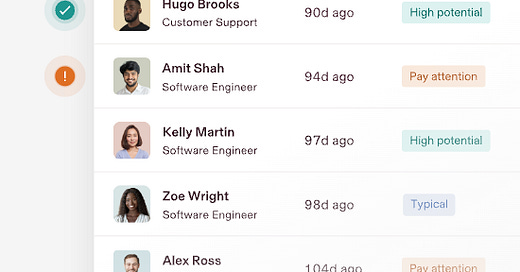

In the clip, Yuval describes a future in which millions of AI agents make decisions on our behalf. As a concrete example, just this week (September 25, 2024), Rippling, a human resources information system (HRIS) company, announced its new product, Talent Signal.

Talent Signal uses AI (currently models from Anthropic and OpenAI) to review the “work product” of engineers (code) and customer support employees (support tickets) and generate individual performance reviews. The AI is used to flag which employees have high potential and which need “management support.” Although managers are still required to review its recommendations, the AI will play a significant role in whether an employee is tracked for advancement or becomes part of the next RIF (reduction in force).

This is not the first decision-support AI agent. AI co-pilots are making their way to market across many domains (legal, accounting, customer support, etc.). Co-pilots will progress into auto-pilots in the near future, whether intentionally or unintentionally. In practice, the line between “co-pilot” and “auto-pilot” is irrelevant.

Studies show how quickly people come to trust an AI’s decisions: “If the AI appears too high quality, workers are at risk of ‘falling asleep at the wheel’ and mindlessly following its recommendations without deliberation.”

It does not matter whether the AI can itself execute the actions that follow from a decision. Whether a human or the AI carries them out, the outcome will be the same, especially if the human doesn’t stop to question the decision, which is becoming more common.

And why would they question a decision when they cannot determine how the AI arrived at it?

Interpretability

“A surprising fact about modern large language models is that nobody really knows how they work internally.” - Anthropic Research Team

One could argue that AI models taking on the burden of decision-making is better than the way things work today. People often neglect decisions that need to be made, make them too late, or make them too quickly, before reviewing the pertinent information.

In the case of Talent Signal, AI could eliminate the subconscious bias a manager may hold toward an employee, which can inflate or deflate a performance review. AI could also make it practical to hold performance reviews more frequently than they happen today.

However, there are downsides to this. Because we can’t fully understand how a model arrives at its decision, we can’t really know whether it is making a fair or rational one. When you break it down functionally, the way this all works is genuinely bizarre:

1. You provide some text to the model as input (code or support ticket logs).
2. The text is split into “tokens,” which are often whole words but can also be pieces of words or punctuation. Each token is then “translated” into a set of numbers in the model’s universe.
3. The model takes this set of input numbers and finds other, closely matched numbers, which represent tokens that could follow the tokens provided.
4. Each candidate token is scored against the input and the context tokens to see how well it follows, and the “best” one is chosen (or, in many setups, one is sampled from the most likely candidates).
5. What counts as “best” is somehow determined by how the model was trained and what it was trained on.
6. The process repeats, one token at a time, until the model produces a final token or hits a length cap.
7. The model then converts the tokens it chose back into the same language as the input.
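To make the loop above concrete, here is a toy sketch in Python. Everything in it is an assumption for illustration: the seven-entry vocabulary, the hand-written `SCORES` table, and the function names are hypothetical, and splitting on whitespace stands in for the sub-word tokenizers real models use. A real LLM computes its scores with a neural network over the whole context, while this toy only looks at the last token. What it does show is the shape of the process: encode the text, score every candidate, pick the best, repeat until an end token or a cap, and decode.

```python
# A toy version of the generation loop. The vocabulary and SCORES table are
# invented; a real model scores tokens with a neural network over the whole context.
END = "<end>"

# Tiny vocabulary: each token gets an integer id ("a set of numbers").
VOCAB = [END, "the", "code", "is", "clean", "tested", "and"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}

# Hypothetical "learned" scores: SCORES[previous][candidate] = how well candidate follows.
SCORES = {
    "the": {"code": 2.0, "is": 0.1},
    "code": {"is": 1.5, "and": 0.2},
    "is": {"clean": 1.2, "tested": 1.0},
    "clean": {"and": 0.9, END: 0.8},
    "and": {"tested": 1.1, "clean": 0.3},
    "tested": {END: 1.4},
}


def encode(text: str) -> list[int]:
    """Split on whitespace and map each word to its id (real tokenizers use sub-word pieces)."""
    return [TOKEN_TO_ID[word] for word in text.lower().split()]


def decode(ids: list[int]) -> str:
    """Map ids back to text."""
    return " ".join(VOCAB[i] for i in ids)


def next_token(context: list[int]) -> int:
    """Score every vocabulary entry and return the id of the best one (greedy choice).

    This toy only looks at the last token; pairs missing from SCORES score -inf.
    """
    row = SCORES.get(VOCAB[context[-1]], {})
    scores = [row.get(tok, float("-inf")) for tok in VOCAB]
    # max() returns the first of any ties, so an all -inf row falls back to END (id 0).
    return max(range(len(VOCAB)), key=lambda i: scores[i])


def generate(prompt: str, max_new_tokens: int = 10) -> str:
    """Repeat next_token until END is produced or the cap is reached, then decode."""
    ids = encode(prompt)
    new_ids: list[int] = []
    for _ in range(max_new_tokens):
        tok = next_token(ids + new_ids)
        if tok == TOKEN_TO_ID[END]:
            break
        new_ids.append(tok)
    return decode(new_ids)


print(generate("the code"))  # prints "is clean and tested"
```

Even in this toy, the “why” behind each choice lives entirely in the numbers in `SCORES`. In a real model those numbers are billions of learned parameters, which is exactly what makes the middle of the loop so hard to interpret.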

Steps 3-5 are essentially a mystery. Yes, the engineers who built the model set up the mathematical operations that get it to make reasonable choices for the next token, but the process itself is still a mystery. The model creates a universe of its own, in which each token has a defined place, coordinates if you will. How the model gets from one set of tokens to another is not something we really understand.
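The “coordinates” idea can be illustrated with embeddings, the vectors a model assigns to tokens. The three-dimensional vectors below are invented for illustration (real models learn vectors with hundreds or thousands of dimensions), and cosine similarity is just one common way to measure how closely two of them point in the same direction.

```python
import math

# Hypothetical 3-D "coordinates" for a few tokens. These numbers are invented;
# a real model learns its vectors during training.
EMBEDDINGS = {
    "bug": [0.90, 0.10, 0.00],
    "defect": [0.85, 0.15, 0.05],
    "promotion": [0.00, 0.20, 0.95],
}


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 = same direction, 0.0 = orthogonal."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


near = cosine_similarity(EMBEDDINGS["bug"], EMBEDDINGS["defect"])
far = cosine_similarity(EMBEDDINGS["bug"], EMBEDDINGS["promotion"])
print(f"bug vs defect: {near:.2f}, bug vs promotion: {far:.2f}")
# prints "bug vs defect: 1.00, bug vs promotion: 0.02"
```

The arithmetic is easy to follow. What it cannot tell us is why training put “bug” next to “defect” and far from “promotion,” which is the interpretability gap in miniature.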

It might seem like we do, because we interact with the model in natural language. However, just because we interact with an LLM in English doesn’t mean we know why it is saying what we are reading. The English is mostly the model handing information back in a form we can read; it could just as easily spit out a set of numbers we would have no idea what to do with.

Chain of Thought?

One recent advance comes from an area of research called “chain of thought” reasoning. In this process, the model breaks the user’s request down into multiple steps and answers each one, using its answers from earlier steps to work out the next.

It may work through many such steps, often generating thousands of tokens of intermediate reasoning, with the aim of producing a more reliable overall result.

Chain-of-thought reasoning is a step toward interpretability because we can now see how the model breaks its task into multiple steps. The model can share its chain of thought so we can check whether we agree with the steps and with the result at each one.
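Structurally, the step-by-step idea looks something like the sketch below. It is a simplification under stated assumptions: `answer_step` is a hypothetical stand-in for a call to a language model, the sub-questions are supplied up front (a reasoning model would generate them itself), and `toy_model` answers with invented ticket counts. The point is the shape: each answer feeds the next step, and the trace of steps is a by-product that can either be shown to the user or withheld.

```python
from typing import Callable, Optional

# Hypothetical signature for one model call: a sub-question plus earlier answers in, an answer out.
AnswerFn = Callable[[str, list[str]], str]


def chain_of_thought(
    steps: list[str], answer_step: AnswerFn, show_trace: bool = True
) -> tuple[str, Optional[list[tuple[str, str]]]]:
    """Answer each step in order, passing earlier answers forward; optionally return the trace."""
    answers: list[str] = []
    trace: list[tuple[str, str]] = []
    for step in steps:
        answer = answer_step(step, answers)  # later steps can build on earlier answers
        answers.append(answer)
        trace.append((step, answer))
    final = answers[-1] if answers else ""
    return final, (trace if show_trace else None)


def toy_model(step: str, previous: list[str]) -> str:
    """Hypothetical stand-in for a model, using invented ticket counts."""
    if step == "How many tickets were closed?":
        return "40"
    if step == "How many were reopened?":
        return "4"
    if step == "What is the reopen rate?":
        closed, reopened = int(previous[0]), int(previous[1])
        return f"{reopened / closed:.0%}"
    return "unknown"


STEPS = [
    "How many tickets were closed?",
    "How many were reopened?",
    "What is the reopen rate?",
]

final, trace = chain_of_thought(STEPS, toy_model)
for step, answer in trace:
    print(f"{step} -> {answer}")
print("Final:", final)  # prints "Final: 10%"
```

Calling `chain_of_thought(STEPS, toy_model, show_trace=False)` returns the same final answer with no trace, which is roughly what a user sees when a provider keeps the chain of thought hidden.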

The Argument for Transparency

Without true interpretability of AI models, we must demand transparency. Otherwise we will be forced to live with decisions made opaquely. We have no idea what outcomes are being optimized for, who those outcomes favor, and who those outcomes affect (directly or indirectly).

If an AI model is going to review my work performance, I would rather know what it is basing my review on. Am I going to miss out on a promotion because I prefer tabs over spaces in my code? Was the code-specialized AI model trained on the code of the best programmers, or just on the consensus of code that is publicly available? What if my code departs from the consensus because it improves on current best practices, and therefore gives the application an advantage?

If I’m a customer service rep, what if I’m following company policy but getting bad reviews because I won’t do what customers ask when it’s against that policy? What if I’m violating company policy but doing what customers ask, and therefore getting positive reviews?

Yes, these nuances are currently meant to be handled by competent managers, who are supposed to use the AI performance review as one input among many. But we can’t assume all managers are competent. There will be managers (likely many) who blindly follow the AI’s opinion and act on its output as the root cause of their decisions.

Even if we switch to a chain-of-thought model, model providers don’t always share the model’s thought trace. As of today, OpenAI’s o1 API keeps the chains of thought hidden from users, even though users are billed for the reasoning tokens generated.

“Therefore, after weighing multiple factors including user experience, competitive advantage, and the option to pursue the chain of thought monitoring, we have decided not to show the raw chains of thought to users.”

Open Prompts & Collaboration

This post is not about halting progress on AI or the deployment of AI to ease decision making burdens. We do need these tools to drive our economy forward and faster. We can’t rewind time to when things were less complex and we had less information to go on.

However, we can proceed with transparency. Rather than having the AI work in the dark, behind the scenes, we could make the prompts and system prompts provided to the model visible.

For Talent Signal, companies could share with their teams the traits that are valued, the traits that are not, and any other instructions given to the model. The prompts are not just inputs to the AI models; they are, in essence, the shared values of what the company deems critical to success, for the company and for the employees working there. Better still, each department or team could define what meeting the mark looks like for its current goals. The prompts would be updated accordingly, and everyone would have some say in how work product is judged, free from manager bias. Employees would ultimately have more control over their own performance reviews, because the reviews would rest on their work and they would know ahead of time what constitutes “great” versus “poor.”

Verifiable AI would then bind these promises together. Employees and managers collaborate to define what great work is and capture those definitions in prompts for a credible, capable AI model. With a verifiable model, anyone reviewing a result after it is generated can confirm that the prompts were not changed and that the model executed them as given.
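One piece of this, showing that the prompts were not changed, can be sketched with an ordinary cryptographic hash: publish a fingerprint of the agreed prompts, and anyone can recompute it later. This is a minimal sketch under assumptions: the function names, the `model_id` field, and the example prompt text are hypothetical, and it uses SHA-256 over a canonical JSON encoding. It does not prove that the model actually ran those prompts; that part of verifiable AI needs additional machinery (for example, attestation from the system running the model) that this sketch does not attempt.

```python
import hashlib
import json


def prompt_fingerprint(model_id: str, system_prompt: str, user_prompt: str) -> str:
    """SHA-256 over a canonical JSON encoding, so identical inputs always hash identically."""
    payload = json.dumps(
        {"model": model_id, "system": system_prompt, "user": user_prompt},
        sort_keys=True,
        ensure_ascii=False,
        separators=(",", ":"),
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def prompts_unchanged(model_id: str, system_prompt: str, user_prompt: str, published: str) -> bool:
    """True only if the prompts used now hash to the fingerprint the team published."""
    return prompt_fingerprint(model_id, system_prompt, user_prompt) == published


# Hypothetical team-agreed prompts and model name, for illustration only.
SYSTEM = "Rate code on correctness, test coverage, and clarity. Ignore tabs vs spaces."
USER = "Review the attached pull requests for Q3."
published = prompt_fingerprint("example-model-v1", SYSTEM, USER)

print(prompts_unchanged("example-model-v1", SYSTEM, USER, published))  # prints True
print(prompts_unchanged("example-model-v1", SYSTEM + " Favor speed.", USER, published))  # prints False
```

Any quiet edit to the prompt, even a single added sentence, changes the hash, so a fingerprint posted where employees can see it makes such edits detectable.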

For high stakes use cases, like when someone’s job or career trajectory is on the line, it is important to build trust in the systems that are making decisions. That trust is much stronger when it is easily verifiable.